Mars Pathfinder

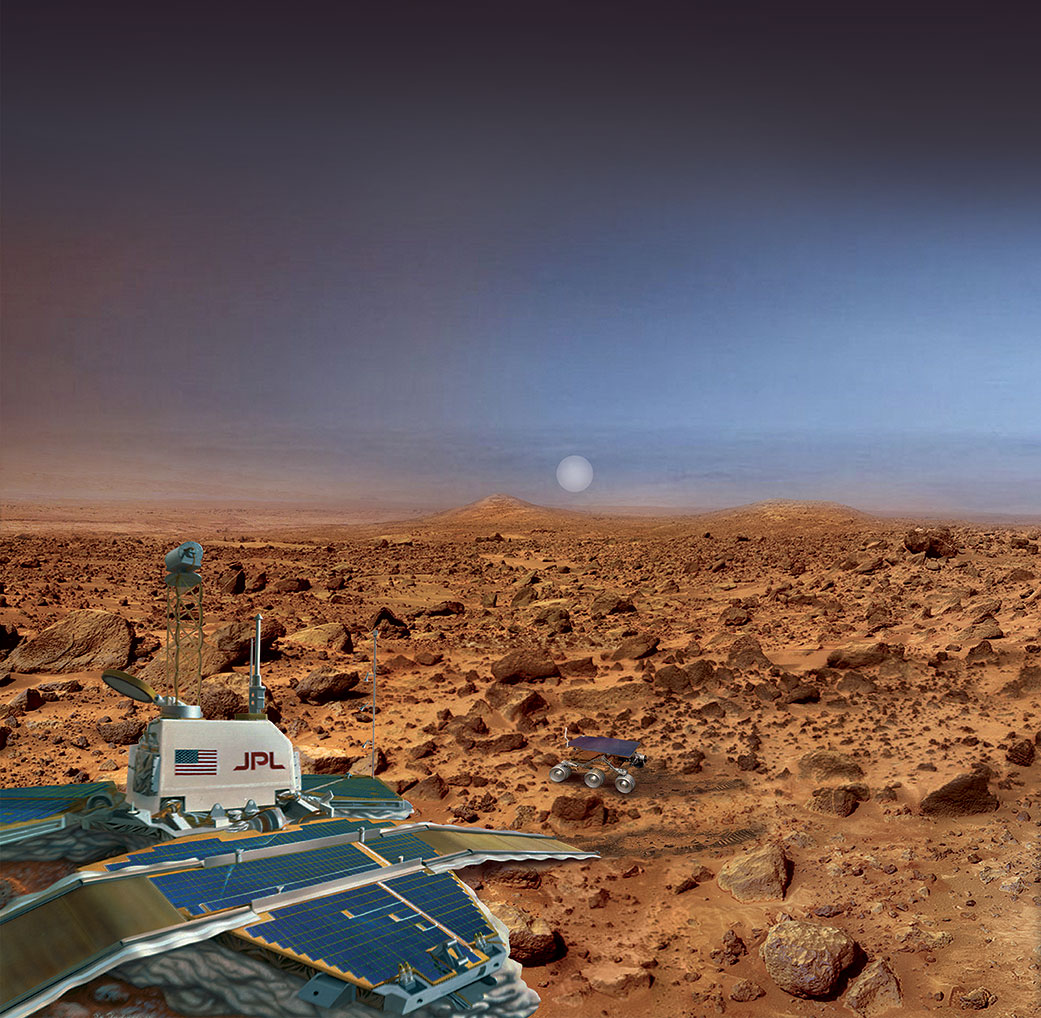

NASA’s Mars Pathfinder successfully demonstrated a new way to safely land on the Red Planet and deliver the first-ever robotic rover, Sojourner, to the Martian surface.

Mars Pathfinder launched on Dec. 4, 1996, and landed in Mars' Ares Vallis on July 4, 1997. It successfully delivered an instrumented lander and the Sojourner rover, the first robotic rover to land and operate on the Martian surface. Pathfinder also returned a then-unprecedented amount of data and outlived its primary design life. At a time when the Internet was still in its infancy, the mission captured the attention of millions of people, who stayed glued to their computers to watch anxious and excited engineers and scientists in Mission Control and to view Mars images transmitted to Earth.

Key Facts

- Launch Date: Dec. 4, 1996 UTC

- Launch Site: Cape Canaveral Air Force Station, Florida

- Launch Vehicle: Delta II 7925

- Landing Date: July 4, 1997

- Landing Site: Ares Vallis, Mars

- End of Mission: Sept. 27, 1997
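The dates in the list above imply how long the spacecraft spent in transit and how long it operated on the surface. The following is a minimal sketch of that arithmetic, not an official mission timeline: it counts whole Earth calendar days and ignores time of day and time zones (only the launch date is given in UTC), and the helper name `days_between` is a hypothetical one chosen for illustration.

```python
from datetime import date

# Dates taken from the Key Facts list; times of day are not given, so
# the results are whole Earth calendar days, not exact durations.
LAUNCH = date(1996, 12, 4)
LANDING = date(1997, 7, 4)
END_OF_MISSION = date(1997, 9, 27)


def days_between(start: date, end: date) -> int:
    """Return the number of calendar days from start to end (hypothetical helper)."""
    return (end - start).days


cruise_days = days_between(LAUNCH, LANDING)
surface_days = days_between(LANDING, END_OF_MISSION)

print(f"Launch to landing: {cruise_days} days")
print(f"Landing to end of mission: {surface_days} days")
```

Under these assumptions, the trip to Mars took about seven months and surface operations lasted just under three months.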

Mars Pathfinder Lander

Mars Pathfinder was originally designed as a technology demonstration to deliver an instrumented lander and a free-ranging robotic rover to the surface of the Red Planet.

Both the lander and the 23-pound (10.6 kilogram) rover, Sojourner, carried instruments to make scientific observations and to provide engineering data on the new technologies being demonstrated. These included scientific instruments to analyze the Martian atmosphere, climate, geology, and the composition of its rocks and soil. Mars Pathfinder used an innovative method of directly entering the Martian atmosphere and landing.

From landing until the final data transmission on Sept. 27, 1997, Mars Pathfinder returned 2.3 billion bits of information, including more than 16,500 images from the lander and 550 images from the rover, as well as more than 15 chemical analyses of rocks and soil and extensive data on winds and other weather factors. Findings from the scientific instruments on both the lander and the rover suggest that Mars was once warm and wet.
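To put the 2.3 billion bits in more familiar units, the figure can be converted to megabytes and averaged over the surface mission. This is a rough sketch under stated assumptions: decimal megabytes (10^6 bytes), whole calendar days between the landing date and the final transmission, and an even spread of data across the mission, which the article does not claim. The function name `bits_to_megabytes` is hypothetical.

```python
from datetime import date

TOTAL_BITS = 2.3e9  # "2.3 billion bits", as stated in the text
LANDING = date(1997, 7, 4)
FINAL_TRANSMISSION = date(1997, 9, 27)


def bits_to_megabytes(bits: float) -> float:
    """Convert bits to decimal megabytes (8 bits per byte, 10**6 bytes per MB)."""
    return bits / 8 / 1e6


total_mb = bits_to_megabytes(TOTAL_BITS)
mission_days = (FINAL_TRANSMISSION - LANDING).days

# Assumption: data returned evenly over the mission, for illustration only.
avg_mb_per_day = total_mb / mission_days

print(f"Total data returned: {total_mb:.1f} MB")
print(f"Average per day over {mission_days} days: {avg_mb_per_day:.2f} MB")
```

By this estimate the entire data return comes to a few hundred megabytes, or a few megabytes per day on average.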

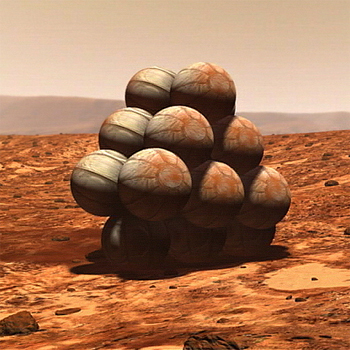

Airbag Landing

Pathfinder used an innovative method of directly entering the Martian atmosphere, assisted by a parachute to slow its descent through the thin Martian atmosphere and a giant system of airbags to cushion the impact. It was the first time this airbag technique had been used.

Traveling at 31 mph (14 meters per second) and measuring 19 feet (5.8 meters) in diameter inside its airbags, Pathfinder bounced like a giant beach ball about 15 times, as high as 50 feet (15 meters). It came to rest 2-1/2 minutes later, about six-tenths of a mile (1 kilometer) from the point of initial impact.

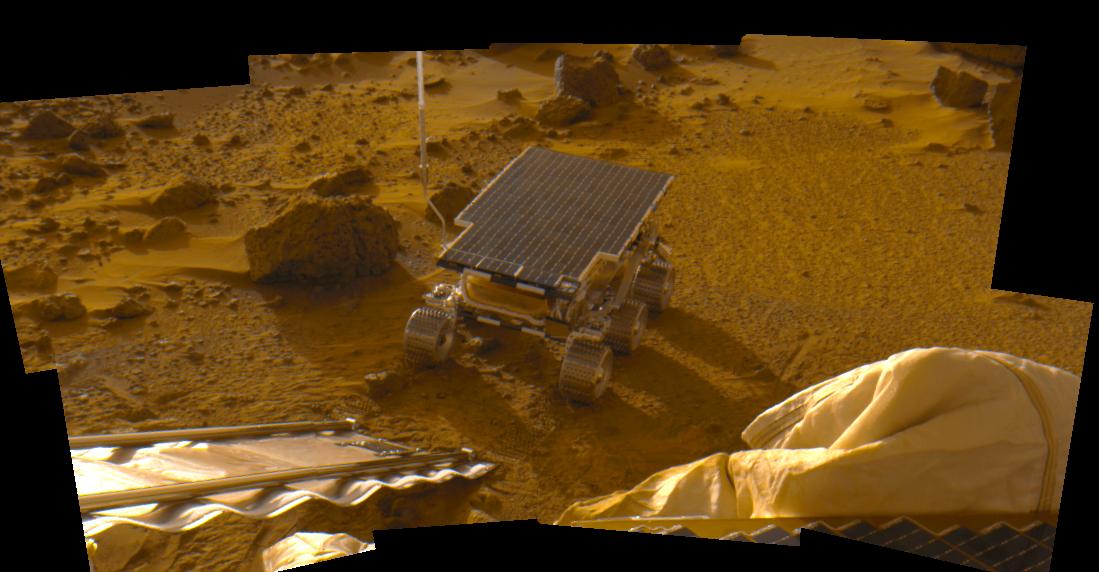

Sojourner Rover

The lander, named the Carl Sagan Memorial Station to honor the famed astronomer who had died the year before, deployed the rover, named Sojourner after American civil-rights crusader Sojourner Truth.

On the night of July 5, late in the second Martian day, or sol 2, Sojourner stood up to its full height of 1 foot (30 centimeters) and rolled down the lander’s rear ramp, which was tilted at 20 degrees from the surface, well within the limits of safe deployment.

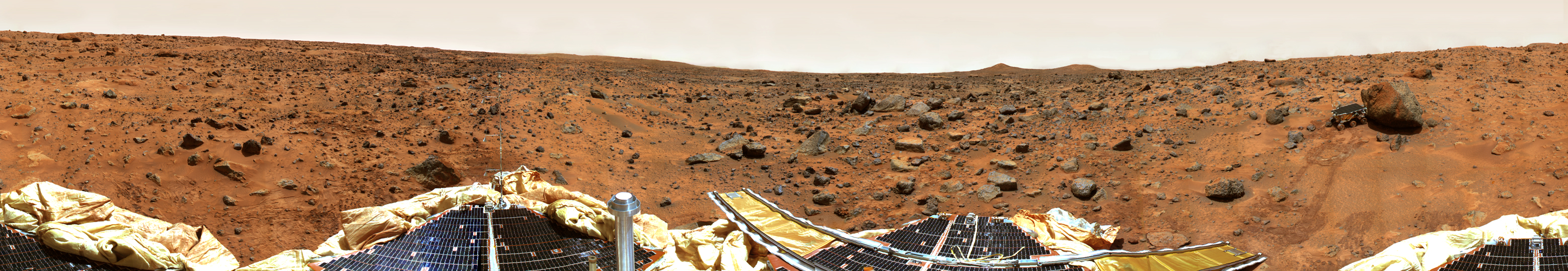

Landing Site: Ares Vallis

The landing site, an ancient flood plain in Mars’ northern hemisphere known as Ares Vallis, is among the rockiest parts of Mars. It was chosen because scientists believed it to be a relatively safe surface to land on and one that contained a wide variety of rocks deposited during a catastrophic flood. Scientists believe that, in an event early in Mars’ history, a volume of water the size of North America’s Great Lakes cut the flood plain in about two weeks.

Mars Pathfinder Science Instruments

- Alpha Proton X-ray Spectrometer: Determined the elemental composition of rocks and soils

- Three Cameras: Provided images of the surrounding terrain for geological studies, and documented the performance and operating environment for Pathfinder mission technologies

- Atmospheric Structure Instrument/Meteorology Package: Measured the Martian atmosphere during Pathfinder's descent to the surface, and provided meteorological measurements at the lander

Mars Pathfinder Science Highlights

- Rounded pebbles and cobbles at the landing site, and other observations, suggested conglomerates that formed in running water during a warmer past in which liquid water was stable.

- Radio tracking of Mars Pathfinder provided a precise measure of the lander's location and Mars' pole of rotation. The measurements suggested that the radius of the planet's central metallic core is greater than 800 miles (1,300 kilometers) but less than roughly 1,250 miles (2,000 kilometers).

- Airborne dust is magnetic, and its characteristics suggest the magnetic mineral is maghemite, a very magnetic form of iron oxide, which may have been freeze-dried on the particles as a stain or cement. An active water cycle in the past may have leached out the iron from materials in the crust.

- Dust devils were seen and frequently measured by temperature, wind, and pressure sensors. Observations suggested that these gusts are a mechanism for mixing dust into the atmosphere.

- Early morning water ice clouds were seen in the lower atmosphere.

- Abrupt temperature fluctuations were recorded in the morning, suggesting that the atmosphere is warmed by the planet's surface, with heat convected upward in small eddies.

